DeepSeek-V3-0324 Review: 7 Powerful Reasons This Open-Source AI Beats GPT-4 & Claude 3.5

Artificial intelligence continues to evolve rapidly, and DeepSeek-V3-0324 is the latest model to shake up the field. Released as open source under the MIT license, it competes directly with proprietary giants like GPT-4, Claude 3.5 Sonnet, and the Gemini models. With its efficient architecture, low cost, and open accessibility, DeepSeek-V3-0324 is poised to change how AI is used in research, development, and enterprise applications.

What Makes DeepSeek-V3-0324 Stand Out?

Released on March 24, 2025, DeepSeek-V3-0324 is a Mixture-of-Experts (MoE) model whose architecture pairs strong benchmark performance with low computational cost. Its headline specifications are both impressive and pragmatic:

- 671 Billion Parameters: While the total parameter count is high, only 37 billion parameters are activated per token during inference. This cuts the compute and power needed per token, although the full set of weights still has to be held in memory.

- Cost-Efficient Training: Trained on 14.8 trillion high-quality tokens, the model required only 2.788 million GPU hours on Nvidia H800 GPUs, costing just $5.576 million (about $2 per GPU hour), a fraction of the training cost for many proprietary models.
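
The figures in the list above can be sanity-checked with a little arithmetic. The sketch below uses only the numbers quoted in this article; the variable names are illustrative, and the hourly rate is derived from the quoted cost and GPU hours rather than taken from a price list.

```python
# Figures quoted in the article.
total_params_b = 671          # total parameters, in billions
active_params_b = 37          # parameters activated per token, in billions
gpu_hours = 2.788e6           # H800 GPU hours used for training
training_cost_usd = 5.576e6   # quoted training cost in US dollars

# Share of the network that does work for any single token.
active_fraction = active_params_b / total_params_b

# Hourly GPU rate implied by the quoted cost and GPU hours.
implied_rate = training_cost_usd / gpu_hours

print(f"Active parameters per token: {active_fraction:.1%}")
print(f"Implied GPU rate: ${implied_rate:.2f} per hour")
```

Roughly one parameter in eighteen is active for a given token, which is where the per-token compute saving comes from.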

DeepSeek-V3-0324's Key Innovations

🔹 Multi-Head Latent Attention (MLA)

MLA compresses the attention keys and values into a smaller latent vector, which shrinks the key-value cache the model has to keep in memory. The result is better memory efficiency and faster inference without sacrificing accuracy.
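
To see where a memory saving of this kind can come from, it helps to compare the per-token cache of standard multi-head attention with a cache that stores one compressed vector per layer. The sketch below is a simplified illustration: every dimension is hypothetical (not DeepSeek's actual configuration), the function names are made up for this example, and it ignores details of the real design such as the extra positional component that is cached alongside the latent.

```python
def kv_cache_bytes_per_token(n_layers, n_heads, head_dim, bytes_per_value=2):
    """Standard multi-head attention: one key and one value vector per head, per layer."""
    return n_layers * 2 * n_heads * head_dim * bytes_per_value


def latent_cache_bytes_per_token(n_layers, latent_dim, bytes_per_value=2):
    """Latent-style cache: a single compressed vector per layer."""
    return n_layers * latent_dim * bytes_per_value


# Hypothetical dimensions, chosen only for illustration.
layers, heads, head_dim, latent_dim = 32, 32, 128, 512

full = kv_cache_bytes_per_token(layers, heads, head_dim)
latent = latent_cache_bytes_per_token(layers, latent_dim)
ratio = full / latent

print(f"Full KV cache:   {full:,} bytes per token")
print(f"Latent cache:    {latent:,} bytes per token")
print(f"Reduction:       {ratio:.0f}x")
```

With these made-up numbers the cache shrinks by a factor of 16; the real ratio depends on the model's actual dimensions.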

🔹 Auxiliary-Loss-Free Strategy

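Instead of adding an auxiliary loss term to keep its experts evenly loaded, DeepSeek-V3-0324 balances load by adjusting a per-expert bias in the router. This keeps training stable and avoids the performance penalty that auxiliary losses can impose on other MoE models.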
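
A toy simulation can make the idea of balancing experts without an auxiliary loss more concrete. The sketch below is a minimal illustration, not DeepSeek's implementation: it assumes a bias-based scheme in which each expert carries a routing bias that is nudged down when the expert is overloaded and up when it is underloaded. The function names, the score skew, and the step size are all hypothetical.

```python
import random


def route_top_k(scores, bias, k):
    """Pick the k experts with the highest (score + bias)."""
    ranked = sorted(range(len(scores)), key=lambda e: scores[e] + bias[e], reverse=True)
    return ranked[:k]


def update_bias(bias, load, step):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, expert_load in zip(bias, load):
        if expert_load > mean_load:
            new_bias.append(b - step)
        elif expert_load < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


def simulate(n_experts=8, k=2, n_tokens=512, n_rounds=200, step=0.01, seed=0):
    """Return the per-expert load in the first and last round of routing."""
    rng = random.Random(seed)
    # Hypothetical skew: lower-numbered experts get systematically higher scores.
    skew = [0.5 - 0.05 * e for e in range(n_experts)]
    bias = [0.0] * n_experts
    first_load = last_load = None
    for _ in range(n_rounds):
        load = [0] * n_experts
        for _ in range(n_tokens):
            scores = [skew[e] + rng.random() for e in range(n_experts)]
            for e in route_top_k(scores, bias, k):
                load[e] += 1
        if first_load is None:
            first_load = load
        last_load = load
        bias = update_bias(bias, load, step)
    return first_load, last_load


first, last = simulate()
print("Load per expert, first round:", first)
print("Load per expert, last round: ", last)
```

In this toy setup the bias only influences which experts are selected; no extra term is added to the training loss, which is the point of the approach.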

🔹 Multi-Token Prediction (MTP)

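This technique trains the model to predict several future tokens at each position rather than only the next one. The extra predictions can also be used to speed up generation (for example, through speculative decoding) while maintaining high-quality outputs.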
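
One simple way to picture multi-token prediction is to look at what the model is asked to predict at each position. The sketch below is only a data-level illustration under that assumption: the function name and the `depth` parameter are hypothetical, and it says nothing about how the prediction heads themselves are built.

```python
def multi_token_targets(tokens, depth):
    """For each position, pair the context so far with the next `depth` tokens."""
    examples = []
    for i in range(len(tokens) - depth):
        context = tokens[: i + 1]
        targets = tokens[i + 1 : i + 1 + depth]
        examples.append((context, targets))
    return examples


tokens = ["The", "cat", "sat", "on", "the", "mat"]
examples = multi_token_targets(tokens, depth=2)
for context, targets in examples:
    # Show the last context token and the tokens to be predicted after it.
    print(context[-1], "->", targets)
```

With `depth=1` this reduces to ordinary next-token prediction; larger depths give the model a denser training signal per position.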

🔹 Energy Efficiency

DeepSeek-V3-0324 reportedly runs at 20+ tokens per second on a Mac Studio (M3 Ultra) while drawing around 200 watts, making it a practical option for on-premises AI deployment and a viable alternative to cloud-dependent models.
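
The throughput and power figures above translate directly into energy per token. The sketch below uses only the two numbers quoted in this section and treats them as steady-state values, which is an assumption; since 20 tokens per second is a lower bound, the energy figure is an upper estimate.

```python
power_watts = 200        # quoted power draw
tokens_per_second = 20   # quoted lower bound on throughput

# Watts are joules per second, so dividing by tokens per second gives joules per token.
joules_per_token = power_watts / tokens_per_second

# 1 kWh is 3.6 million joules.
tokens_per_kwh = 3_600_000 / joules_per_token

print(f"Energy per token: {joules_per_token:.0f} J")
print(f"Tokens per kWh:   {tokens_per_kwh:,.0f}")
```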

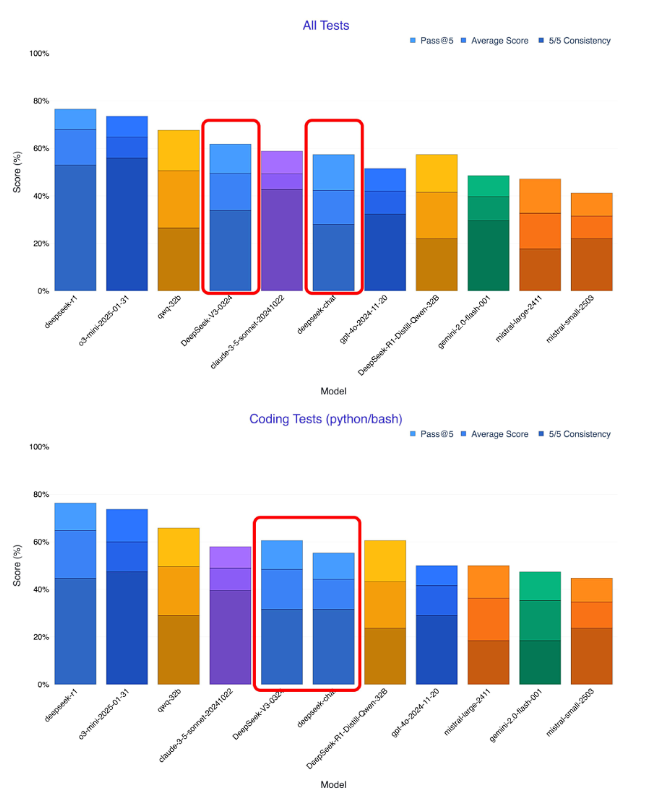

Performance Comparison: DeepSeek-V3-0324 vs. Industry Leaders

DeepSeek-V3-0324 has proven itself a formidable contender across a range of benchmarks, as shown in the accompanying performance graphs.

1. General Benchmark Performance

- Pass@5: DeepSeek-V3-0324 is on par with GPT-4 and Claude 3.5 Sonnet in several testing categories.

- Average Scores: The model excels in consistency and maintains competitive results across diverse tasks, including natural language understanding and reasoning.

2. Coding Tests (Python/Bash)

- DeepSeek-V3-0324 achieves higher Pass@5 scores than many competitors, making it a standout model for developers working on code generation and debugging tasks.
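
For readers unfamiliar with the metric, Pass@5 is the chance that at least one of five generated solutions passes the tests. The graphs do not say how the scores were computed, so the sketch below is an assumption: it shows the unbiased pass@k estimator commonly used in code-generation evaluations, with a made-up example of 10 samples per problem.

```python
from math import comb


def pass_at_k(n, c, k):
    """Estimate pass@k from n generated samples, of which c passed the tests."""
    if n - c < k:
        # Fewer than k failing samples exist, so any k samples include a pass.
        return 1.0
    # One minus the probability that all k drawn samples are failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical example: 10 samples for a problem, 2 of them correct.
score = pass_at_k(n=10, c=2, k=5)
print(f"pass@5 = {score:.3f}")
```

A benchmark score is then the average of this value over all problems.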

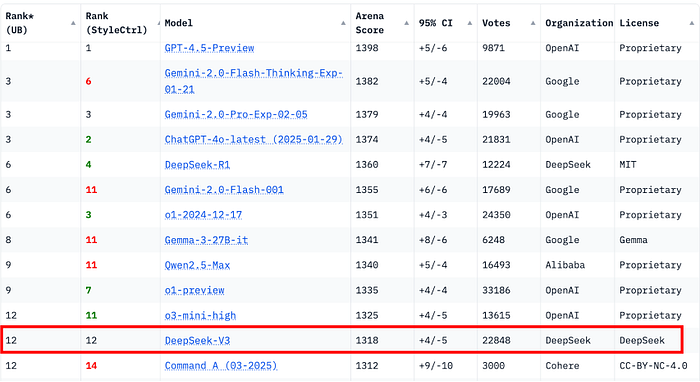

3. Ranking Comparison

- DeepSeek-V3 ranks 12th globally in Arena evaluations with an Arena Score of 1318, placing it alongside top-tier proprietary models like GPT-4.5 Preview and Gemini’s latest iterations.

- Its open-source nature and affordability make it particularly appealing to startups, researchers, and small organizations.

Why Open-Source Matters: The DeepSeek Revolution

DeepSeek-V3-0324 is licensed under MIT, which removes many of the financial and legal barriers associated with proprietary AI models. Here’s why this is a game-changer:

- 🚀 Accessibility: Startups and researchers can harness the power of a world-class AI model without incurring hefty licensing fees.

- 🚀 Customizability: The model's open weights and code let enterprises fine-tune it for domain-specific use cases.

- 🚀 Innovation: Open access fosters experimentation and collaboration, accelerating advancements in AI.

Challenges and Criticism

Despite its groundbreaking achievements, DeepSeek-V3-0324 is not without its challenges:

- Censorship Concerns: Users have reported restricted responses to politically sensitive topics, such as those surrounding Tiananmen Square and Taiwan. Developers are working on transparency and user-defined moderation settings to address these issues.

The Verdict: A Paradigm Shift in AI

DeepSeek-V3-0324 represents a bold statement: top-tier AI should be accessible, affordable, and adaptable. By bridging the gap between cost efficiency and state-of-the-art performance, it paves the way for a future where AI innovation is no longer limited to organizations with deep pockets.

Experience the Future of AI Today

Ready to revolutionize your AI projects? Download the DeepSeek-V3-0324 weights from Hugging Face, find the code on GitHub, and join the open-source AI revolution!

Key Takeaways

- Competitive Performance: Matches or outperforms proprietary models in key benchmarks.

- Cost-Effective Training: Redefines efficiency with $5.576M training costs.

- Open Accessibility: Licensed under MIT, which permits commercial use and modification.

- Room for Growth: Developers are addressing censorship concerns for a more transparent user experience.